If you regularly optimize your site, you have probably already registered it in Google Search Console. Google Search Console notifies you by email about any errors it finds, for example those that appear after significant technical changes such as implementing an SEO audit. It is important to stay calm and interpret this data correctly. How do you do that? That is what this post is about.

First, I want to underline that Google Search Console is NOT a decision-making system. It holds plenty of data on GoogleBot crawling and indexing and on snippet performance in the SERP: average position, snippet CTR, and the number of impressions and clicks your snippets get. If you receive an email listing GoogleBot crawl errors on your site, that doesn't mean everything is bad and you urgently need to restore those pages.

Google Search Console alerts you to everything it found while crawling the site, but it can't tell you what decisions to make.

Let's look through several real-life examples to show you what I am talking about.

Finding out what was changed

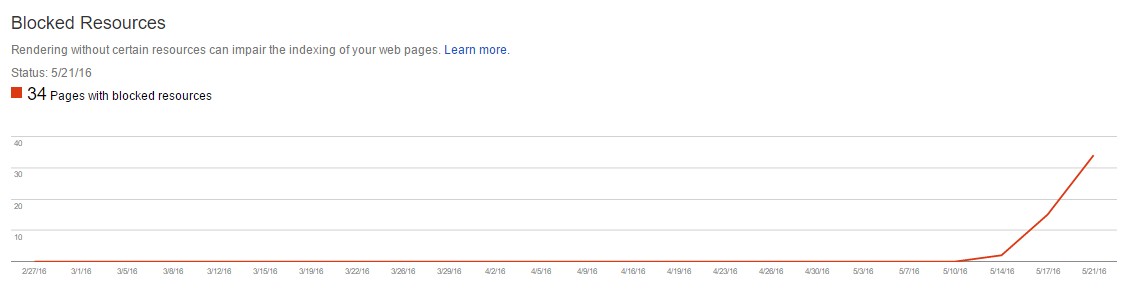

"Why is there a huge growth in blocked resources on my site? I haven't done anything."

Blocked resources aren't URLs that were blocked by the search engine. They are URLs that your own robots.txt file blocks from crawling. You need to understand how GoogleBot found links to a blocked directory that it hadn't found before. Have you changed your robots.txt file? If not, look for links to the blocked pages in the source code, the site's content, the sitemap.xml URLs, or backlinks from elsewhere on the web. The type of link will tell you which of these to check first.

In this example, the XML sitemap was the cause.
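A sitemap problem like this can be checked with a short script. The sketch below is only an illustration: the robots.txt rules, the sitemap, and the example.com URLs are made up, and Python's standard `urllib.robotparser` does simple prefix matching, so it will not reproduce Google's handling of wildcard rules exactly.

```python
from urllib.robotparser import RobotFileParser
import xml.etree.ElementTree as ET

# Namespace used by standard sitemap.xml files.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> list[str]:
    """Extract every <loc> URL from a sitemap.xml document."""
    root = ET.fromstring(sitemap_xml.strip())
    return [loc.text.strip() for loc in root.findall(".//sm:loc", SITEMAP_NS) if loc.text]


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that robots.txt disallows for the given user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical sample data: the sitemap lists a URL from a blocked directory.
ROBOTS_TXT = """
User-agent: *
Disallow: /filter/
"""

SITEMAP_XML = """
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/filter/red</loc></url>
  <url><loc>https://example.com/about</loc></url>
</urlset>
"""

conflicts = blocked_urls(ROBOTS_TXT, sitemap_urls(SITEMAP_XML))
print(conflicts)
```

Any URL in the result is one you are submitting to Google in the sitemap while forbidding it in robots.txt, so one of the two files needs to change.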

Consider the whole picture

An SEO audit had just been implemented on one of our new projects.

I know what you are thinking: "Oh, someone accidentally blocked that site from crawling and all its pages dropped out of the Google index!" But what if that site has only 160 pages? Is it okay that it now has 220 pages in the Google SERP? And where did those 10,000 come from?

The key phrase here is "project after SEO audit implementation". The audit showed a huge number of "alien" pages, because the client's site had been hacked. Those pages lived outside the website's CMS and there were no internal links to them, so no one could see them when crawling the site. But how did GoogleBot find them? The attackers had added backlinks from other sites, and Ahrefs shows all of them. GoogleBot followed those backlinks, crawled the pages, and added them to the SERP. After the SEO audit was implemented, those pages were deleted, so the indexation graph in GSC plummeted, and now almost all the "alien" pages are gone from the SERP.
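To spot such pages yourself, you can compare the URLs your own crawl or CMS knows about with the URLs that external backlinks point to. The sketch below assumes you have already exported both lists (for example from a crawler and from a backlink tool); the function names and sample URLs are hypothetical, and the normalization is deliberately crude.

```python
from urllib.parse import urlsplit


def normalize(url: str) -> str:
    """Reduce a URL to host + path (+ query) so trivial variants compare equal.

    The scheme is dropped, the host is lowercased, and a trailing slash
    on the path is ignored.
    """
    parts = urlsplit(url.strip())
    path = parts.path.rstrip("/") or "/"
    query = f"?{parts.query}" if parts.query else ""
    return f"{parts.netloc.lower()}{path}{query}"


def alien_pages(site_urls: list[str], backlink_targets: list[str]) -> list[str]:
    """Return backlink targets that the site's own crawl does not know about."""
    known = {normalize(url) for url in site_urls}
    linked = {normalize(url) for url in backlink_targets}
    return sorted(linked - known)


# Hypothetical exports: pages found by crawling the site, and backlink targets.
crawled = ["https://example.com/", "https://example.com/about/"]
backlinked = [
    "https://example.com/about",
    "http://EXAMPLE.com/cheap-pills",
    "https://example.com/",
]

print(alien_pages(crawled, backlinked))
```

Every URL in the output receives external links but is unreachable from inside the site, which makes it worth a manual look.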

Track the history of changes

Website data analysis should be based on the history of changes made to the project. Any crawling, error, or indexation trend is the result either of decisions you made or of decisions you didn't make.

What was your first impression when you saw the errors growing on the site? It is unlikely that it made you happy. However, this graph doesn't stand for anything bad either. Because of technical problems the online store had, the logic for generating filter URLs was changed several times. In the end, through joint efforts, all the links were brought to a single consistent format. The graph shows the result: GoogleBot gradually discovers that all the URLs that contained duplicate content are no longer available.
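If the history of changes is kept as a dated log, matching a shift in a GSC graph to its likely cause becomes a simple lookup. The following is a minimal sketch; the `Change` record, the sample entries, and the 30-day window are assumptions for illustration, and the right window depends on how quickly GoogleBot recrawls your site.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Change:
    day: date
    note: str


def changes_before(log: list[Change], anomaly_day: date, window_days: int = 30) -> list[Change]:
    """Return changes made within `window_days` before the anomaly, newest first."""
    start = anomaly_day - timedelta(days=window_days)
    recent = [change for change in log if start <= change.day <= anomaly_day]
    return sorted(recent, key=lambda change: change.day, reverse=True)


# Hypothetical change log for an online store.
change_log = [
    Change(date(2024, 1, 10), "Redesigned category pages"),
    Change(date(2024, 3, 2), "Changed filter URL generation logic"),
    Change(date(2024, 3, 20), "Removed old filter URLs from sitemap.xml"),
]

# The day the error graph in Search Console started to climb.
for change in changes_before(change_log, date(2024, 3, 25)):
    print(change.day.isoformat(), change.note)
```

The lookup only narrows down the candidates; deciding which change actually caused the shift is still up to you.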

Conclusions

When you start working on a new project, learn as much about it as you can and conduct an SEO audit. For long-term work, you need to track every implemented change and know when it happened and why. To restore a project's chronology, you can use the log in Google Tag Manager, notes in Google Analytics, and tasks in your task management system (we at Netpeak use Planfix). Notes in Google Analytics deserve a separate mention: they are easy to use and helpful at any stage of a project. Notes are not copied across GA views, so it's better to add them in the main view, "Domain - All Web Site Data". From there you can check an existing note or add a new one.

So don't panic when analyzing Search Console data, and make informed decisions based on the history of the project's changes. If you have any questions or examples, feel free to share them in the comments!
